Motivation Pulse-Check:

A fancy instrument or a call-to-action tool?

Over the last decade, employee engagement and motivation have been recognized as having an undeniable impact on all people-related activities within an organization. This recognition has fueled the growth of a new market niche and the appearance of new players alongside the large, well-known analytics and consulting companies. The pandemic put an end to tiptoeing around employee engagement and set a strong trend toward a permanent motivation pulse-check. The boom in pulse-checks, whether offered by a variety of providers or designed internally, means that many companies now make this instrument part of their regular HR toolbox.

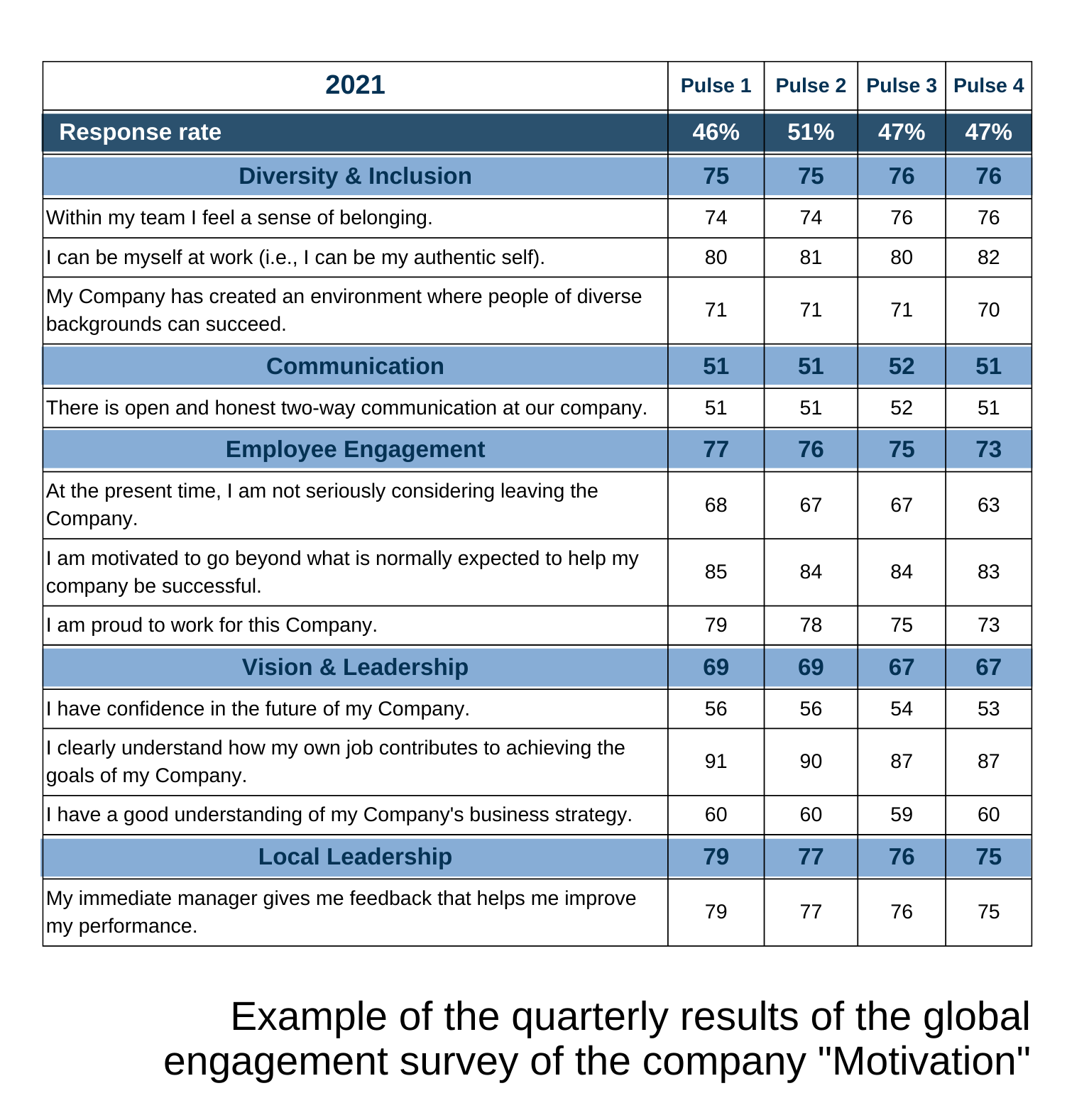

Recently I worked with a mid-sized industrial company (approx. 3,300 FTEs), and one of the topics we discussed was its global employee engagement survey. Filled with pride, the process owner showed me the following results:

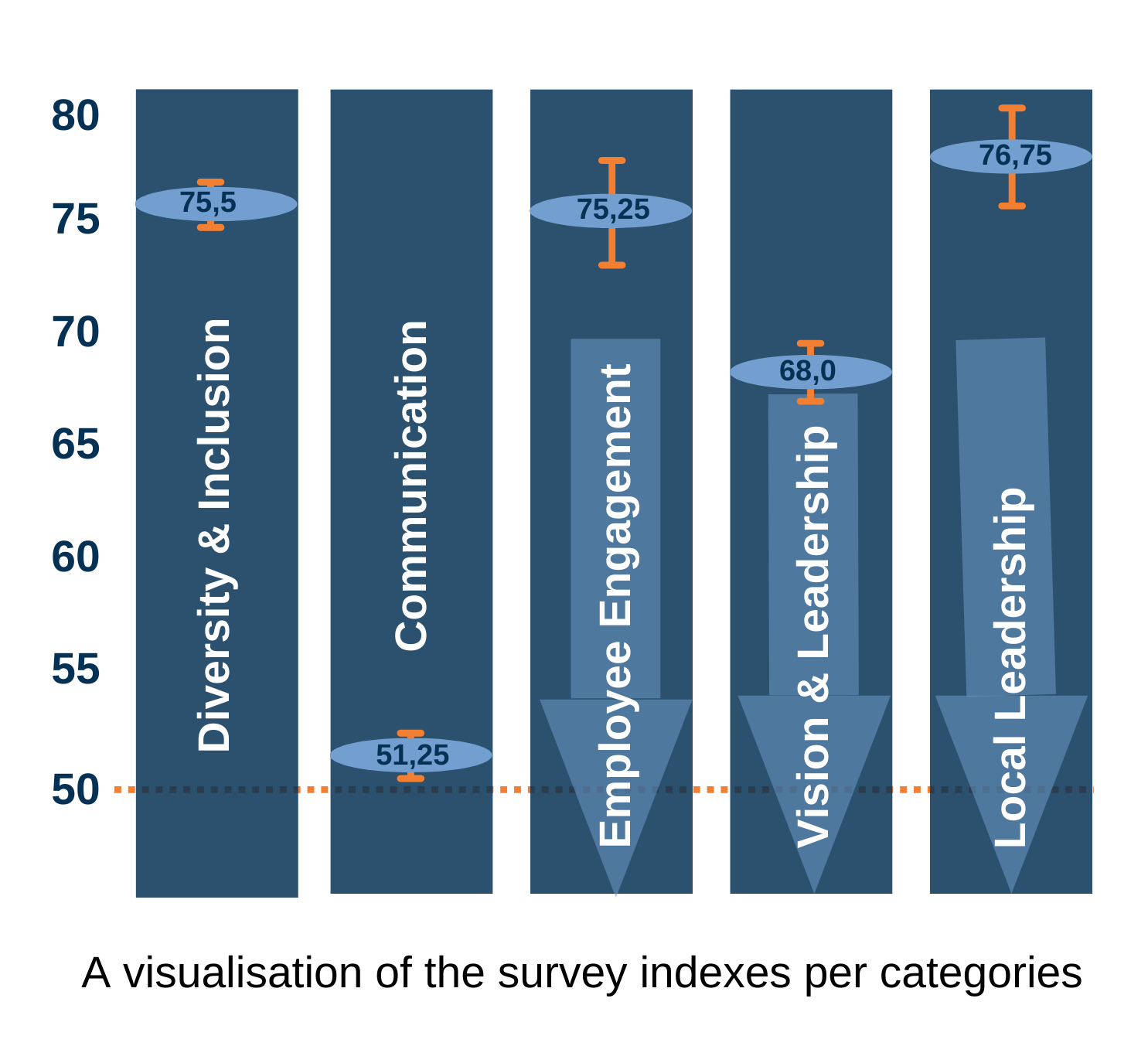

Without going into the details of how the index is calculated, the results for all categories fall into the “good” range, i.e., above 50. For the sub-categories related to employees’ self-esteem, the results fall into a very high range between 80 and 91, which supports the assumption that the respondents are highly motivated and engaged. At first glance, the results look excellent, despite a slight downward trend in three of the five major categories.

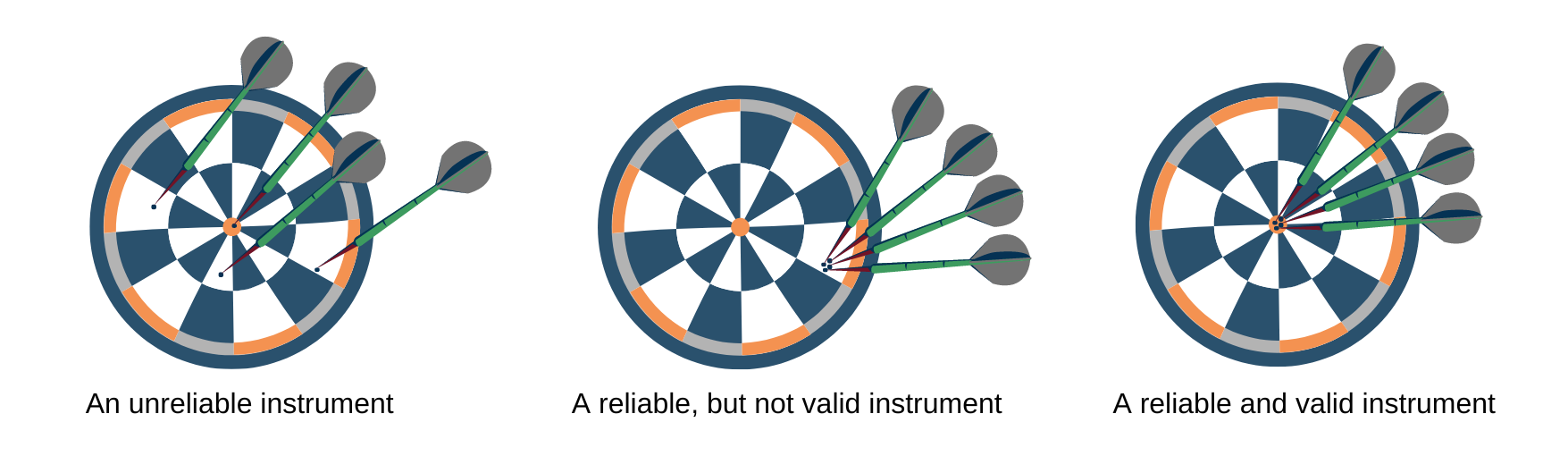

Let’s look at what is behind the data and how the company can use the results. A properly designed and applied engagement survey can be a valuable tool for learning what works and what doesn’t in a company, and for developing and tuning strategies that better serve business needs and any kind of change. At the same time, there are many pitfalls in using any measuring instrument to obtain reliable and valid results. The basic idea can be illustrated with the well-known target diagram: reliability is how tightly the shots cluster, and validity is how close they land to the bull’s-eye.

The linchpin of the reliability of any measuring instrument is its design. Reliability means that the instrument yields consistent results when it repeatedly measures the same dedicated area. Reaching a high level of reliability is not a simple task: it requires not only knowledge and design skills but also comprehensive testing on a large, heterogeneous population. Therefore, companies with limited design capabilities should consider offers from external providers rather than develop their own engagement survey. Reliability is the first pivotal element; without it, any follow-up activity turns into pure guessing instead of analysis. In our case, the company used an engagement survey offered by an external provider, and the consistent, narrow spread of values across sub-categories indirectly supports the assumption that the tool is reliable.
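For readers who want to check reliability on their own raw data, a common internal-consistency statistic is Cronbach’s alpha. The sketch below is an illustration only, not the external provider’s method (the case does not describe it), and assumes the answers are available as a respondents × items matrix of Likert scores for one sub-scale. The function name `cronbach_alpha` and the sample data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of scores.

    Hypothetical helper: each row is one respondent, each column one item
    of the same sub-scale.
    """
    k = len(responses[0])  # number of items in the sub-scale
    if k < 2:
        raise ValueError("need at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five respondents to a three-item sub-scale (1-5 Likert)
sample = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 3]]
print(round(cronbach_alpha(sample), 2))  # prints 0.91
```

Values closer to 1 indicate that the items of a sub-scale move together; a frequently quoted rule of thumb treats values of about 0.7 or higher as acceptable, although thresholds vary by field.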

The second core element of any measuring instrument is its validity: the confirmation that we measure what we intended to measure. Design undoubtedly contributes to validity as well; however, negligent application is a common reason why a reliable and valid tool produces skewed results. As a consequence, a company spends a lot of money and effort on the wrong activities, which yield few of the expected results, if any. In our case, the company fell into this trap, and there is a mismatch between its sense of reality and its survey-based analytics.

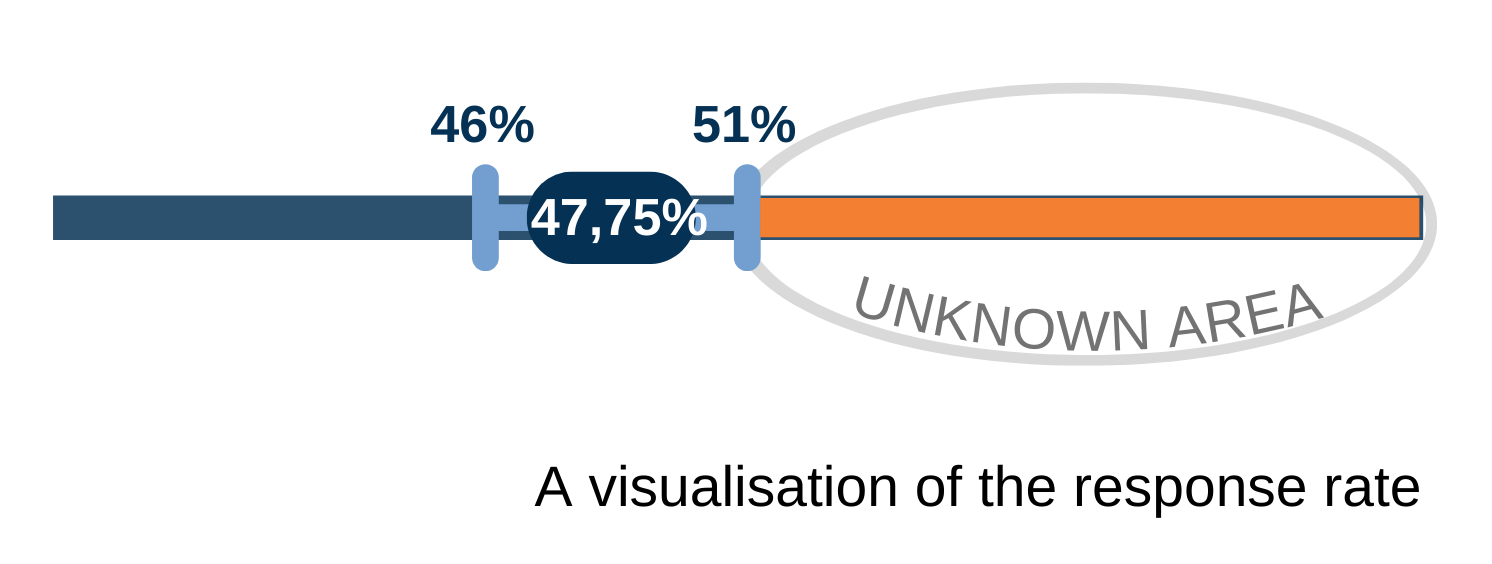

One of the most critical points in any statistical analysis is the selection of the right sample when it is not possible to collect data from the entire population. Mistakes in sampling inevitably lead to biased results. In an organizational context, where the population is known and limited in size, all employees have to be included in the engagement survey sample to get valid results. In the company’s case, the average response rate is low (<50%). This means that most of the company’s employees did not participate in the survey, and because those who choose to respond may differ systematically from those who do not, the results cannot be considered valid for the company as a whole.
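To make the self-selection effect concrete, here is a small simulation built entirely on assumptions: a hypothetical workforce of 3,300 employees with engagement scores on a 0-100 index, and a made-up response model in which more engaged employees are more likely to answer. It is not based on the company’s data; it only shows the direction of the bias when participation stays below 50%. The function name `simulate_nonresponse` and all parameters are hypothetical.

```python
import random
from statistics import mean


def simulate_nonresponse(n_employees: int = 3300, seed: int = 42):
    """Compare the true mean engagement with the mean seen among respondents.

    Hypothetical model: more engaged employees are more likely to respond.
    Returns (population mean, respondent mean, response rate).
    """
    rng = random.Random(seed)
    # Assumed engagement scores: normal(60, 15), clipped to the 0-100 index range
    scores = [min(100.0, max(0.0, rng.gauss(60, 15))) for _ in range(n_employees)]
    # Assumed response probability: rises linearly from 10% at a score of 0
    # to 70% at a score of 100 (about 46% overall for this population)
    respondents = [s for s in scores if rng.random() < 0.1 + 0.6 * s / 100]
    response_rate = len(respondents) / n_employees
    return mean(scores), mean(respondents), response_rate


true_mean, observed_mean, rate = simulate_nonresponse()
print(f"response rate: {rate:.0%}")
print(f"true mean: {true_mean:.1f}, mean among respondents: {observed_mean:.1f}")
```

Under these assumptions the survey overstates engagement by a few index points, even though every individual answer is honest; the gap comes only from who chose to respond.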

This is not a unique case but rather a typical one that I’ve encountered in my practice. To proceed, the process owner should honestly answer one question: “Why was this pulse-check implemented in the company?” If the main reason is to follow the dominant trend of having a fancy instrument, and its results are not going to be turned into actions, then the job is done, and nice pictures with “the outstanding results” can be shown at any time. If the primary interest lies in getting a true snapshot of employees’ motivation and engagement and in designing appropriate nudging actions for improvement, then the first area to work on is the tool’s validity, through an increase in the participation rate. This is a daunting but doable task, with the employees at the center!
